Spark Basics

Suppose we have a web application hosted in an application orchestrator like kubernetes. If load in that particular application increases then we can horizontally scale our application simply by increasing the number of pods in our service.

Now let’s suppose there is heavy compute operation happening in each of the pods. Then there will be certain limit upto which these services can run because unlike horizontal scaling where you can have as many numbers of machines as required, there is limit for vertical scaling because you can’t have unlimited ram and cpu cores for each of the machines in a cluster. Distributed Computing removes this limitation of vertical scaling by distributing the processing across cluster of machines. Now, a group of machines alone is not powerful, you need a framework to coordinate work across them. Spark does just that, managing and coordinating the execution of tasks on data across a cluster of computers. The cluster of machines that Spark will use to execute tasks is managed by a cluster manager like Spark’s standalone cluster manager, Kubernetes, YARN, or Mesos.

Spark Basics

Spark is distributed data processing engine. Distributed data processing in big data is simply series of map and reduce functions which runs across the cluster machines. Given below is python code for calculating the sum of all the even numbers from a given list with the help of map and reduce functions.

from functools import reduce

a = [1,2,3,4,5]

res = reduce(lambda x,y: x+y, (map(lambda x: x if x%2==0 else 0, a)))Now consider, if instead of a simple list, it is a parquet file of size in order of gigabytes. Computation with MapReduce system becomes optimized way of dealing with such problems. In this case spark will load the big parquet file into multiple worker nodes (if the file doesn’t support distributed storage then it will be first loaded into driver node and afterwards, it will get distributed across the worker nodes). Then map function will be executed for each task in each worker node and the final result will fetched with the reduce function.

Spark timeline

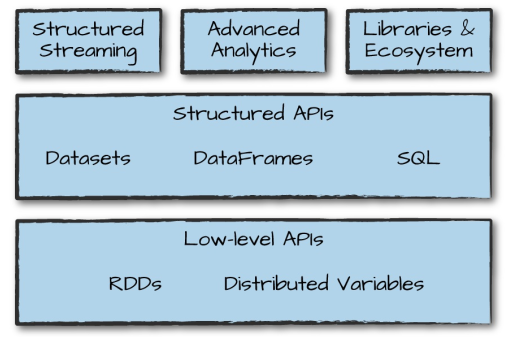

Google was first to introduce large scale distributed computing solution with MapReduce and its own distributed file system i.e., Google File System(GFS). GFS provided a blueprint for the Hadoop File System (HDFS), including the MapReduce implementation as a framework for distributed computing. Apache Hadoop framework was developed consisting of Hadoop Common, MapReduce, HDFS, and Apache Hadoop YARN. There were various limitations with Apache Hadoop like it fell short for combining other workloads such as machine learning, streaming, or interactive SQL-like queries etc. Also the results of the reduce computations were written to a local disk for subsequent stage of operations. Then came the Spark. Spark provides in-memory storage for intermediate computations, making it much faster than Hadoop MapReduce. It incorporates libraries with composable APIs for machine learning (MLlib), SQL for interactive queries (Spark SQL), stream processing (Structured Streaming) for interacting with real-time data, and graph processing (GraphX).

Spark Application

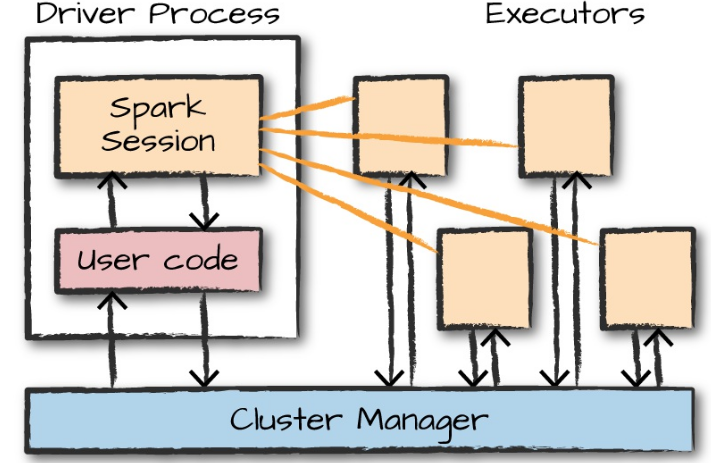

Spark Applications consist of a driver process and a set of executor processes. The driver process runs your main() function, sits on a node in the cluster. The executors are responsible for actually carrying out the work that the driver assigns them. The driver and executors are simply processes, which means that they can live on the same machine or different machines.

There is a SparkSession object available to the user, which is the entrance point to running Spark code. When using Spark from Python or R, you don’t write explicit JVM instructions; instead, you write Python and R code that Spark translates into code that it then can run on the executor JVMs.

Spark’s language APIs make it possible for you to run Spark code using various programming languages like Scala, Java, Python, SQL and R.

Spark has two fundamental sets of APIs: the low-level “unstructured” APIs (RDDs), and the higher-level structured APIs (Dataframes, Datasets).

Spark Toolsets

A DataFrame is the most common Structured API and simply represents a table of data with rows and columns. To allow every executor to perform work in parallel, Spark breaks up the data into chunks called partitions. A partition is a collection of rows that sit on one physical machine in your cluster.

If a function returns a Dataframe or Dataset or Resilient Distributed Dataset (RDD) then it is a transformation and if it doesn’t return anything then it’s an action. An action instructs Spark to compute a result from a series of transformations. The simplest action is count.

Transformation are of types narrow and wide. Narrow transformations are those for which each input partition will contribute to only one output partition. Wide transformation will have input partitions contributing to many output partitions.

Sparks performs a lazy evaluation which means that Spark will wait until the very last moment to execute the graph of computation instructions. This provides immense benefits because Spark can optimize the entire data flow from end to end.

Spark-submit

References

- https://spark.apache.org/docs/latest/

- spark: The Definitive Guide by Bill Chambers and Matei Zaharia